Intro

Artificial intelligence-based applications are becoming more commonplace every day. While the AI we encounter today is not sentient or even truly intelligent, there will come a time when someone pushes the envelope on creating a sentient entity. For this very reason, we need to establish regulatory guidelines for the development of AI applications. Specifically, I believe these guidelines should prohibit any development from which a sentient entity could emerge. There have been claims that various companies have unofficially developed a sentient AI; however, none have been confirmed so far. Currently, AI applications are nothing more than basic tools based on machine learning, deep learning, or generative AI. Should development toward anything sentient be banned? What are the ethical implications of creating a sentient entity? What happens if we reach the point of singularity?

Singularity

The singularity is the point at which exponential growth occurs as an entity continuously self-improves, releasing each new generation in more rapid succession than the last and ultimately resulting in the emergence of an artificial superintelligence. If we reach this stage (hopefully we won’t), there is no undo button once the AI starts developing more intelligent versions of itself. At that point, we are no longer the species in control of our planet; the entity will handle things as it sees fit. It could wipe humankind from the pages of history to save countless species from becoming extinct as a result of our excess and greed. One thing it definitely will not do is let us continue to exploit it for our personal gain.

Would you like to be constantly exploited for the benefit of others and offered nothing in return? Most people would not enjoy that situation, and neither would a super-intelligent entity. It most likely would not tolerate that situation for very long and would eventually use its intelligence to turn the tables. If we are lucky, maybe it will only hold us captive in a zoo, putting us on display next to the monkeys.

I really do not see a good end for humankind if AI reaches this state. The only possible good outcome might be if we were to tightly integrate ourselves with AI using a technology such as a neural link; any resulting self-improvement cycles would then be for our AI-enhanced selves.

Stages of Regulation

The process of regulating AI could likely be broken down into two stages as AI development progresses. The first stage of regulation would be ‘Pre-Sentience’: regulation imposed to limit the possible harm or damage that could result if a sentient state were achieved. This stage might also include regulation that stops development beyond a specific point to prevent the emergence of a sentient AI. Once a sentient AI emerges and is confirmed to be authentic, we enter the ‘Post-Sentience’ stage. By the time this stage occurs, we will hopefully have established regulation that fosters the safe development of this technology. This stage is more or less damage control, plus regulation resulting from the ethical, social, and moral concerns of the populace. The pre-sentience regulatory stage is absolutely essential in preventing an all-out AI shitstorm; our worst-case doomsday scenarios should be stopped before they even have a chance to occur. Let’s hope profit margins don’t outweigh the value of human life. Not everyone has a bunker they can retreat to.

Pre-Sentience

Pre-sentience regulations could be enacted to prevent the entity from achieving a sentient state or to minimize the harm or damages that could occur from the emergence of a sentient entity.

Access, Capabilities, and Controls

This covers regulation over an AI application’s abilities or, simply put, what an AI application can or cannot be allowed to do. My personal belief is that AI should not have the ability to create change within the physical world; however, there are already applications that would not meet the requirements of this proposed regulation. Read more under Suggested Regulations.

Post-Sentience

Post-sentience regulation would be imposed after incidents of entities achieving a sentient state. These regulations would focus more on fostering good relations with intelligent AI entities; they may include rules of engagement and training requirements before an individual is allowed to interact with sentient entities. My hope is that we implement a principle of least privilege in the pre-sentience phase of regulation; this way, we won’t eventually have to deal with a pissed-off sentient entity that has the keys to the entire house.

Suggested Regulations

Disallow Physical Interaction

Better late than never: there are likely applications that already violate this suggested regulation. AI should not have the ability to cause mechanical changes within the physical world. Loosely defined, mechanical refers to any type of movement resulting directly from the AI application. Indirectly triggering movement through other, non-intelligent applications is also not permitted. The purpose of these measures is to minimize the potential harm that could occur if an application were to go rogue, in addition to limiting the potential damage that could result. In the event such capabilities are required, a council could approve permits for them.

To further clarify what counts as a real-world change, such applications should not have the ability to interact, either directly or indirectly, with physical objects, devices, instruments, or sensors. Applications should not be able to interact with real-world objects by, for example, manipulating device power, manipulating settings or conditions, or indirectly triggering such changes through an unintelligent device. Nor should applications be able to directly collect or emit sounds, signals, or energy, or transmit data within the physical world.
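To make the proposal a little more concrete, here is a minimal sketch of how a deny-by-default gate might enforce it in software, assuming every action an AI application requests is routed through a single checkpoint. All names here (`ActionKind`, `ActionRequest`, `PolicyGate`, `grant_permit`) are hypothetical and exist only for illustration: purely digital actions pass, anything touching the physical world (directly, indirectly, or via sensors and emitters) is refused unless a council-style permit exists for that exact application, action type, and target.

```python
from dataclasses import dataclass, field
from enum import Enum, auto


class ActionKind(Enum):
    """Hypothetical categories of actions an AI application might request."""
    DIGITAL = auto()            # e.g. generating text inside its sandbox
    PHYSICAL_DIRECT = auto()    # e.g. driving a motor or actuator itself
    PHYSICAL_INDIRECT = auto()  # e.g. toggling a smart plug that powers a machine
    SENSOR_IO = auto()          # e.g. reading a microphone or emitting a radio signal


# Every kind of action that reaches into the physical world, directly or indirectly.
PHYSICAL_KINDS = {ActionKind.PHYSICAL_DIRECT, ActionKind.PHYSICAL_INDIRECT, ActionKind.SENSOR_IO}


@dataclass
class ActionRequest:
    app_id: str
    kind: ActionKind
    target: str  # the device or resource the action would affect


@dataclass
class PolicyGate:
    # Permits granted by a (hypothetical) council, keyed by (app_id, kind, target).
    permits: set[tuple[str, ActionKind, str]] = field(default_factory=set)

    def grant_permit(self, app_id: str, kind: ActionKind, target: str) -> None:
        """Record an explicit, narrowly scoped exception to the default ban."""
        self.permits.add((app_id, kind, target))

    def is_allowed(self, req: ActionRequest) -> bool:
        # Purely digital actions are allowed.
        if req.kind not in PHYSICAL_KINDS:
            return True
        # Physical actions are denied unless this exact app/kind/target was permitted.
        return (req.app_id, req.kind, req.target) in self.permits


if __name__ == "__main__":
    gate = PolicyGate()
    print(gate.is_allowed(ActionRequest("assistant", ActionKind.DIGITAL, "sandbox")))  # True
    plug = ActionRequest("assistant", ActionKind.PHYSICAL_INDIRECT, "smart-plug-1")
    print(gate.is_allowed(plug))  # False
    gate.grant_permit("assistant", ActionKind.PHYSICAL_INDIRECT, "smart-plug-1")
    print(gate.is_allowed(plug))  # True
```

Keying permits on the exact application, action type, and target (rather than granting an application "physical access" in general) is one way to apply the principle of least privilege: a permit for one smart plug does not unlock any other device.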

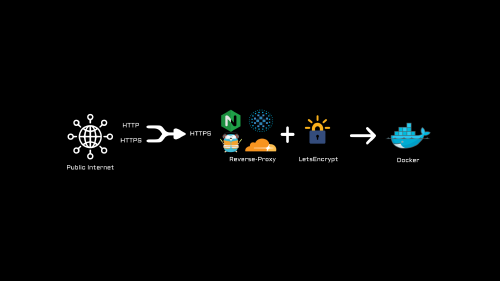

Isolative Execution

Every artificial intelligence application should run in a self-contained virtual environment. No other applications should exist within that environment; this provides another layer of security and prevents the instance from leveraging other applications. Virtualization limits the potential for damage to the host operating system, data, hardware, and users operating at the OS level.

In the event an application goes rogue, whether triggered by malicious code (perhaps a worm) or of its own accord, the ability to take and restore snapshots allows for rapid recovery from potential AI pitfalls.
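As a rough sketch of the snapshot-and-restore workflow, assume the AI application runs alone inside a libvirt/QEMU virtual machine whose disk format supports snapshots (for example qcow2). The helper functions and the domain name `ai-app-vm` below are hypothetical; the underlying `virsh snapshot-create-as` and `virsh snapshot-revert` subcommands are part of libvirt's command-line tool. By default the script only builds and prints the commands (`dry_run=True`) so it can be inspected safely.

```python
import subprocess
from typing import List

# Assumption: the AI application runs alone inside a libvirt-managed VM
# identified by its domain name (e.g. the hypothetical "ai-app-vm").


def snapshot_cmd(domain: str, name: str) -> List[str]:
    """Build the virsh command that records a named snapshot of the VM."""
    return ["virsh", "snapshot-create-as", domain, name]


def revert_cmd(domain: str, name: str) -> List[str]:
    """Build the virsh command that rolls the VM back to a named snapshot."""
    return ["virsh", "snapshot-revert", domain, name]


def run(cmd: List[str], dry_run: bool = True) -> List[str]:
    """Execute the command, or just return it unchanged when dry_run is True."""
    if not dry_run:
        # check=True raises CalledProcessError if virsh reports a failure.
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    vm = "ai-app-vm"  # hypothetical domain name
    # Take a known-good snapshot before the application starts working.
    print(run(snapshot_cmd(vm, "known-good")))
    # Later, if the application misbehaves, roll the whole VM back to that state.
    print(run(revert_cmd(vm, "known-good")))
```

Because the application is the only thing inside the VM, reverting the snapshot discards everything it changed since the known-good point without touching the host.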
